The Report

1.3 Limitations

Our results have limitations and opportunities for improvement that need to be recognized. First, our search concentrated on media written in English. Thus, there is an explicit bias against programs that were not originally written in English or have not been translated into it. To increase the diversity of programs included, we used a free online translation tool (Google Translate) for non-English AI soft law programs found through our identification protocol. Even so, we have undoubtedly missed important documents.

Second, programs were identified, labeled, and reviewed by several individuals. Even so, important soft law programs or their characteristics may well have been misunderstood, leading to mislabeling or erroneous exclusion from our analysis.

Third, the universe of soft law programs is rapidly expanding. As seen in this report, 42% of soft law examples in our sample were created in 2019. Despite the effects of COVID-19, we would not be surprised if 2020 proved to be an important year in the development of new soft law programs related to AI. Unfortunately, our research effort is only a snapshot of AI governance through 2019. By excluding programs created in 2020 and beyond, we have limited our view of the governance ecosystem of this technology.

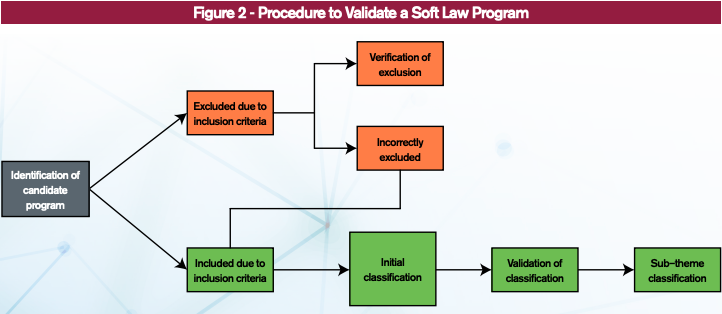

In an effort to minimize the limitations mentioned above, this project validated each program’s information several times. Figure 2 illustrates the steps taken to analyze our data; each box represents an individual pair of eyes. In the shortest case, three individuals confirmed the exclusion of a program. Conversely, five or six individuals evaluated the characteristics of every screened-in program. Although we know that this procedure is not infallible, our efforts were geared towards maximizing the reliability of the information presented to our community of stakeholders.