The Report

2.7 Themes

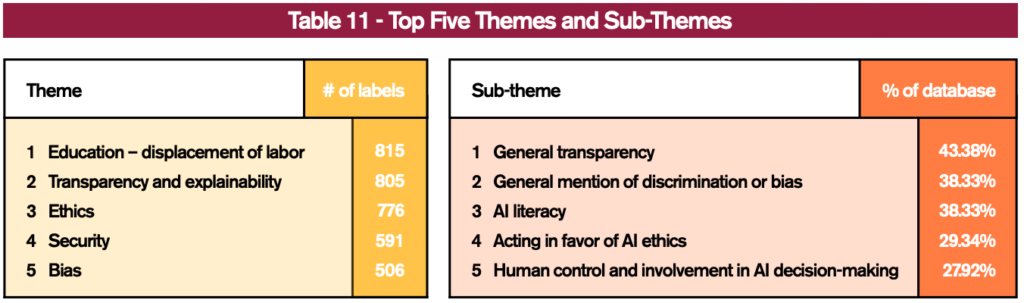

Every program’s text was classified into 15 themes and further subdivided into 78 sub-themes (see methodology section for details on how these divisions were created). Table 11 presents the top five results in both categories. It shows that education/displacement of labor is the theme with the highest number of excerpts in the database, with 815. This means that text related to education/displacement of labor was found 815 times throughout the 634 soft law programs. Meanwhile, the sub-theme of general transparency appears in ~43% of programs. For each theme, readers of this section will find a description of its sub-themes, a table with the percentage of programs that contain each sub-theme, and representative excerpts. The database also contains the prevalence of sub-themes by type of soft law program.
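The two statistics reported here measure different things: theme totals count every excerpt, while sub-theme prevalence counts each program at most once. The following is a minimal sketch of that distinction, assuming a flat list of labeled excerpts. The program identifiers, the toy data, and the function names are hypothetical and are not taken from the database.

```python
from collections import Counter

# Hypothetical toy data: each excerpt is (program_id, theme, sub_theme).
EXCERPTS = [
    ("P1", "Transparency and explainability", "General transparency"),
    ("P1", "Transparency and explainability", "General transparency"),
    ("P1", "Accountability", "General accountability"),
    ("P2", "Transparency and explainability", "General transparency"),
    ("P3", "Accountability", "General accountability"),
]
# Hypothetical list of all programs, including one with no excerpts.
ALL_PROGRAMS = ["P1", "P2", "P3", "P4"]


def excerpts_per_theme(excerpts):
    """Count every excerpt, so one program can contribute several."""
    return Counter(theme for _, theme, _ in excerpts)


def sub_theme_prevalence(excerpts, programs):
    """Percentage of programs with at least one excerpt per sub-theme."""
    programs_by_sub_theme = {}
    for program_id, _, sub_theme in excerpts:
        programs_by_sub_theme.setdefault(sub_theme, set()).add(program_id)
    return {
        sub_theme: 100 * len(ids) / len(programs)
        for sub_theme, ids in programs_by_sub_theme.items()
    }


theme_counts = excerpts_per_theme(EXCERPTS)
prevalence = sub_theme_prevalence(EXCERPTS, ALL_PROGRAMS)
print(theme_counts.most_common(5))
print(prevalence)
```

Under this reading, a theme can accumulate more excerpts than there are programs (as with 815 excerpts across 634 programs), whereas a sub-theme's prevalence can never exceed 100%.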

2.7.1 Accountability

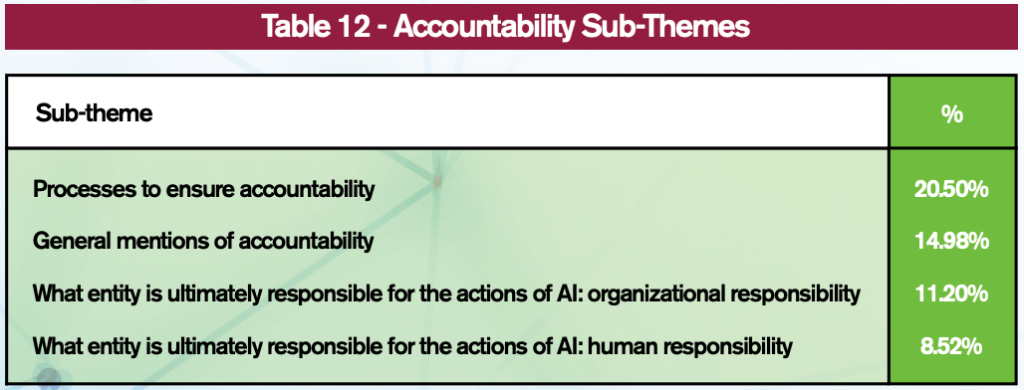

Society is gradually bestowing AI-powered systems with autonomy to make decisions affecting individuals in lethal and non-lethal ways. In this theme, readers will find language highlighting the continuum of issues related to the bearing of responsibility for the unplanned actions and accidents caused by AI systems (see Table 12).

About 15% of programs make general mention of accountability. They allude to the term, loosely define it, or state its importance to the program/society. A further ~21% recognize the need for measures or mechanisms to ensure that accountability is considered. This is done by suggesting the creation of committees, the implementation of procedures, or anything in between. These suggestions range from general indications, such as the one described by the Council of Europe’s Commissioner for Human Rights, “member states must establish clear lines of responsibility for human rights violations that may arise at various phases of an AI system lifecycle” [126], to specific requirements, such as the one declared by the American Civil Liberties Union regarding accountability: “an entity must maintain a system which measures compliance with these principles including an audit trail memorializing the collection, use, and sharing of information in a facial recognition system” [127].

Some programs take a position as to who is primarily responsible for an AI system’s actions. Around 11% single out organizations. They discuss the need to establish the type and extent of liability borne by firms or declare outright that legal persons should be the entities accountable for AI. This point of view is shared by the Association for Computing Machinery: “institutions should be held responsible for decisions made by the algorithms that they use, even if it is not feasible to explain in detail how the algorithms produce their results” [72].

With an opposing view, ~9% of programs affirm that humans, in the form of individual developers, operators, or decision-makers, are ultimately responsible for AI systems: “responsibility for these insights falls to humans, who must anticipate how rapidly changing AI models may perform incorrectly or be misused and protect against unethical outcomes, ideally before they occur” [128]. In between these positions, there is a 3% segment holding both parties accountable. They either differentiate the types of activities for which humans and non-humans are responsible, assign responsibility to both, or are unsure as to which should bear the consequences:

- “Legal responsibility should be attributed to a person. The unintended nature of possible damages should not automatically exonerate manufacturers, programmers or operators from their liability and responsibility” [129]; and,

- “Institutions and decision makers that utilize AI technologies must be subject to accountability that goes beyond self-regulation” [51].

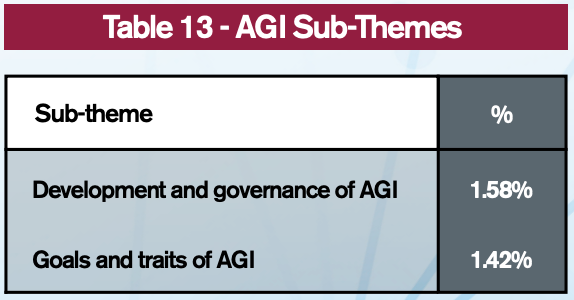

2.7.2 Artificial general intelligence

Defined as “highly autonomous systems that outperform humans at most economically valuable work”, artificial general intelligence (AGI) is the next step in this technology’s evolution [41]. Few programs spotlight AGI, which makes sense considering it is thought to be decades away from development. When discussed, ~1.4% of programs express traits desirable in such systems (see Table 13). The Chinese Academy of Sciences published a number of principles detailing the philosophy that should guide the creation of AI-based conscious beings, including empathy, altruism, and a sense of how to relate with current and future humans [130].

About 1.6% of programs discuss how AGI should be developed and managed by decision-makers. This includes the research agenda to be prioritized (e.g. “autonomous decomposition of difficult tasks, as well as seeking and synthesizing solutions” [120]) or what governance mechanisms ought to be implemented (e.g. “urges the Commission to exclude from EU funding companies that are researching and developing artificial consciousness” [131]).

2.7.3 Bias

AI systems inevitably perpetuate the prejudices inherited from their design or emanating from the underlying data selected for their training. Over a third of the soft law in this database recognizes bias or discrimination in a general manner by stating the term or emphasizing the importance of avoiding its occurrence.

In tackling this issue, programs take different approaches (see Table 14). In ~15% of cases, diversity is a term that represents the creation of a multidisciplinary workforce as a tool to combat the bias of AI systems: “we strive to use teams with people from diverse backgrounds to design solutions using artificial intelligence” [132] and “unless we build AI using diverse teams, data sets and design, we are at risk of repeating the inequality of previous revolutions” [133].

Meanwhile, there are programs that highlight the relevance of including populations that are generally excluded due to demographic or health characteristics (~10%): “AI should facilitate the diversity and inclusion of individuals with disabilities in the workplace” [134]. Lastly, ~16% of programs address bias by suggesting actionable mechanisms to decrease its impact: “a board should be created at EU level to monitor risks of discrimination, bias and exclusion in the use of AI systems by any organisation” [135].

2.7.4 Displacement of labor and education

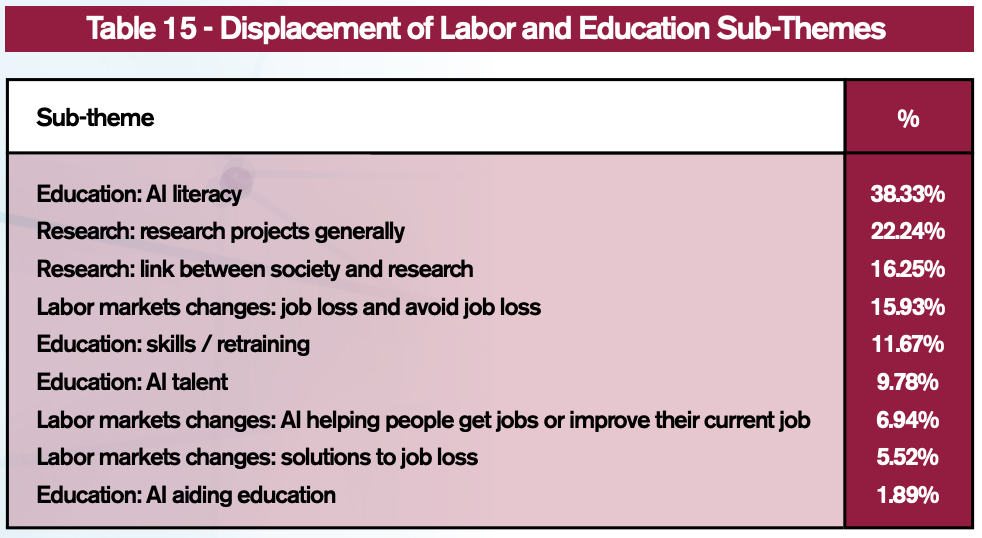

The impetus for this theme was to unearth the relationship between the labor market and AI. Closely linked to it are the educational and research initiatives highlighting alternatives to ameliorate the overarching effects of this technology on population dynamics or to improve its contributions to society. Considering this, the text herein was distributed into three groups: labor, education, and research (see Table 15).

The first group clusters the perceived consequences of AI on labor. It begins with the ~16% of programs that mention the possibility of job loss and the importance of avoiding it: “we have a responsibility to ensure that vulnerable workers in our supply chain are not facing significant negative impacts of AI and automation” [136] and “observe principles of fair employment and labor practices” [52]. A second sub-theme, ~6% of the sample, proposes a variety of alternatives to fight job loss, such as incentivizing communication-based activities: “all stakeholders should engage in an ongoing dialogue to determine the strategies needed to seize upon artificial intelligence’s vast socio-economic opportunities for all, while mitigating its potential negative impacts” [137]. The last label within this group, ~7% of the database, stresses the opposite of the first two, namely the labor efficiencies possible through AI: “simplifying processes and eliminating redundant work increases productivity” [138] and “accessible AI promotes growth and increased employment, and benefits society as a whole” [139].

Education is inextricably linked to preparing future generations for the demographic shifts caused by this technology. One of the most popular sub-themes in this database, appearing in ~38% of programs, remarks on the importance of providing the pedagogical and andragogical tools to facilitate AI literacy:

- “the IBM company will work to help students, workers and citizens acquire the skills and knowledge to engage safely, securely and effectively in a relationship with cognitive systems, and to perform the new kinds of work and jobs that will emerge in a cognitive economy” [78]; and,

- “update the education curriculum to refocus skills sets on AI under the umbrella of media and information literacy in preparation of the next generation of workers for AI adoption” [140].

Another sub-theme details the skills or retraining (~12%), not necessarily related to AI, that individuals whose livelihood is directly affected by this technological shift will need in order to continue earning a living: “low-skilled workers are more likely to suffer job losses…improving skills and competences is thus important to enable wider participation in the opportunities offered by new forms of work and for promoting an inclusive labour market” [141]. To complement both of these efforts, a small percentage of programs (~2%) refer to the ability of AI to aid in the provision of education: “conversational agents have huge potential to educate students…AI enhances our ability to understand the meaning of content at scale and serve it in meaningful and customized ways” [142].

While organizations await the influx of a new wave of AI-literate workers, there are active efforts to recruit experts and specialists from around the world (~10%): “it is widely acknowledged that there is a skill gap in the agritech space and companies do not have time to wait for New Zealand to develop talent entirely on its own. Immigration policy should be continually monitored to allow rapid importing of the skills across the continuum to meet expected growing demand” [30].

The third grouping in this theme centers on research. All types of organizations (e.g. universities, firms, and governments) are incenting basic and applied AI research to improve their competitiveness. There are programs that describe research projects currently in progress or ideas that should be undertaken (~22%): “research Councils could support new studies investigating the consequences of deepfakes for the UK population, as well as fund research into new detection methods” [143]. The last sub-theme links research with society (~16%). Here, readers will find text on technology transfer opportunities, commercialization of AI discoveries, or partnerships with academia to bring research to the public: “DoD should advance the science and practice of VVT&E of AI systems, working in close partnership with industry and academia” [144].

2.7.5 Environment

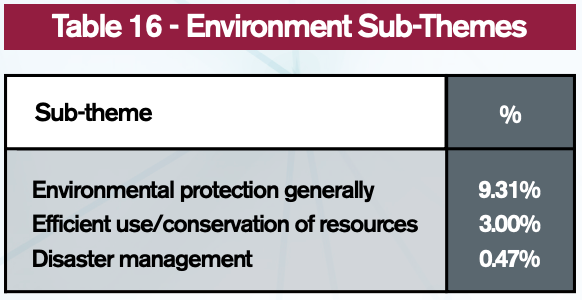

The impact of AI on the environment is not covered extensively in the database (see Table 16). Relating the technology to its planetary impact through general statements occurred in ~9% of programs. One professional association asks its members to “promote environmental sustainability both locally and globally” [43].

Specific mentions of AI’s capacity to improve the conservation of resources through efficiencies (3%) or to assist in disaster management scenarios (~0.5%) were even rarer: “AI can highly improve the energy sector in Mauritius namely by…using IoT and neural algorithms to increase energy efficiency” [145] and “AI can be used in many aspects of preparation for and response to natural disasters and extreme events, such as hurricane winds and storm-related flooding” [146].

2.7.6 Ethics

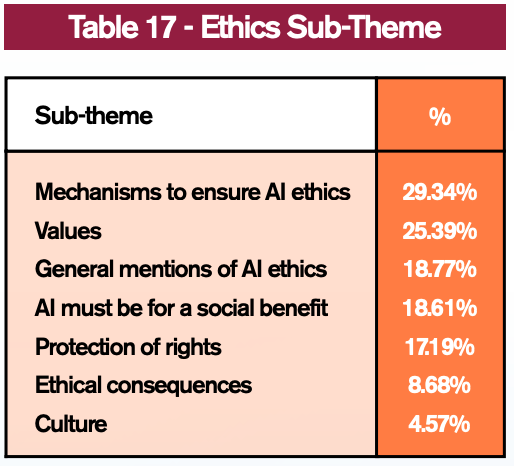

This theme exhibits the moral compass or ideals that guide how organizations employ AI (see Table 17). At the surface level, the term ethics is mentioned without offering much detail as to its meaning (~19%). A similar phenomenon occurs with values (~25%) and culture (~5%) where, in many cases, they are used broadly: “enable a kind of a ‘passport of values’ whereby systems can learn one’s personal value preferences, an important part of prosocial behavior” [147] and “the development of AI technologies and their effects must always be in accordance with current legislation and respect local cultural and social norms” [148].

Many programs expressed hopeful thoughts or commitments about the need to ensure that the technology has a positive impact on society (~19%): “data and AI should enhance societies, strengthen communities, and ameliorate the lives of vulnerable groups” [61]. Further, rights, in particular human rights, were extolled as a vital requirement to be respected by the technology (~17%): “A/IS shall be created and operated to respect, promote, and protect internationally recognized human rights” [149].

Conversely, there are programs that emphasize AI’s negative ethical consequences (~9%): “calls on the Commission to propose a framework that penalises perception manipulation practices when personalized content or news feeds lead to negative feelings and distortion of the perception of reality that might lead to negative consequences” [131]. Almost a third of programs (~29%) mention or suggest actions to ensure AI remains ethical. These include measures such as: “ban AI-enabled mass scale scoring of individuals as defined in our Ethics Guidelines” [150] and “establish a charter of ethics for Intelligent IT to minimize any potential abuse or misuse of advanced technology by presenting a clear ethical guide for developers and users alike” [151].

2.7.7 Health

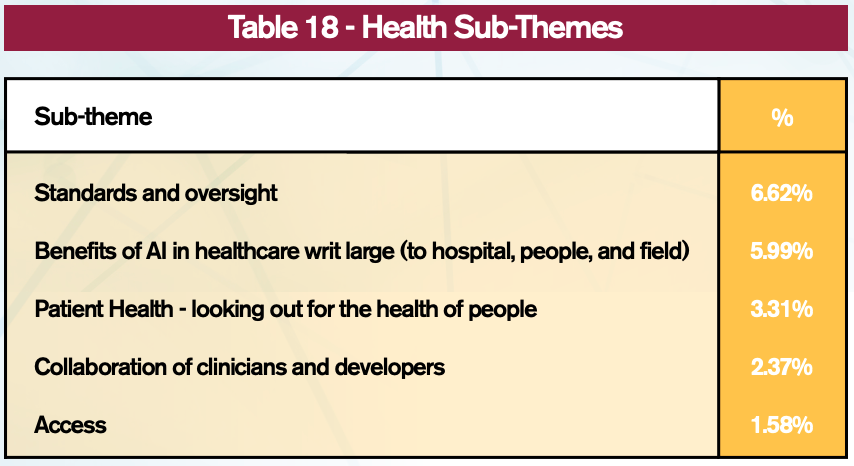

The convergence of health technologies and AI promises to add significant value to the provision of medical services (see Table 18). About 6% of the database stresses the variety of benefits possible for this application of AI. These descriptions range from general statements such as “certain medical treatments or diagnoses might be carried out better with a robot” [152] to specific advantages: “carry out large-scale genome recognition, proteomics, metabolomics, and other research and development of new drugs based on AI, promote intelligent pharmaceutical regulation” [34]. Furthermore, some programs (~3%) spotlight the health and well-being of patients as a central node in the field: “a guiding principle for both humans and health technology is that, whatever the intervention or procedure, the patient’s well-being is the primary consideration” [153].

To ensure the enduring nature of this technology’s advantages, programs stress its development, its governance, and the ability of individuals to access it. In terms of development, having manufacturers and clinicians work together can help ensure that AI is safely created and implemented effectively (~2%): “clinicians can and must be part of the change that will accompany the development and use of AI” [154]. Text that delves into the governance of healthcare AI attempts to verify that any device that assists in making life and death decisions does so in a manner that follows agreed-upon practices or industrial standards (~7%). One standard created specifically for this purpose is aimed at helping manufacturers “through the key decisions and steps to be taken to perform a detailed risk management and usability engineering processes for medical electrical equipment or a medical electrical system, hereafter referred to as mee or mes, employing a degree of autonomy” [155]. Finally, if access to this technology is out of reach for large swaths of the population, its ability to positively contribute to society will be hampered. Statements discussing the need to make this technology available are represented in ~2% of the sample: “fair distribution of the benefits associated with robotics and affordability of homecare and healthcare robots in particular” [156].

2.7.8 Meaningful human control

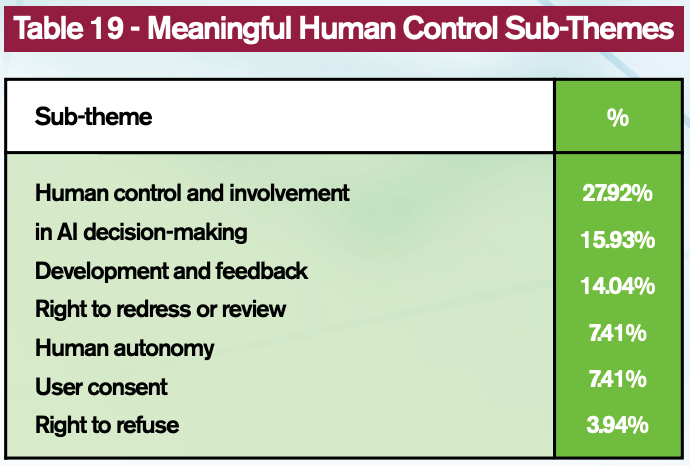

AI systems are capable of decision-making at speeds that are beyond human capabilities. This theme discloses the desire to rein in the technology through diverse means (see Table 19). At its most basic level, it remarks that humans need to be involved in the operation of AI systems (~28%), be it through governance (“we can make sure that robot actions are designed to obey the laws humans have made” [157]) or mechanically (“we are able to deactivate and stop AI systems at any time (kill switch)” [33]).

The meaningful human control sub-themes also describe a continuum of human participation in AI decision-making. For instance, at any time, individuals should be given the ability to opt out of these systems (~4%), “to establish a right to be let alone, that is to say a right to refuse to be subjected to profiling” [158], or be free to make their own decisions without being nudged in a particular direction (~7%), “algorithms and automated decision-making may raise concerns over loss of self-determination and human control” [159].

Prior to the engagement of these systems, about 16% of programs discuss the need to involve stakeholders (e.g. the public and affected entities) in their development: “no jurisdiction should adopt face recognition technology without going through open, transparent, democratic processes, with adequate opportunity for genuinely representative public input and objection” [95]. Meanwhile, ~7% stipulate that consent of some kind should be requested from users before they participate in processes that involve an AI system: “advocate for general adoption of revised forms of consent…for appropriately safeguarded secondary use of data” [128].

Subsequent to being subjected to a decision enacted by this technology, a proportion of programs (~14%) advocate for the right of individuals to seek an explanation for decisions, have these overturned, or dispute them after the fact: “make available externally visible avenues of redress for adverse individual or societal effects of an algorithmic decision system, and designate an internal role for the person who is responsible for the timely remedy of such issues” [160].

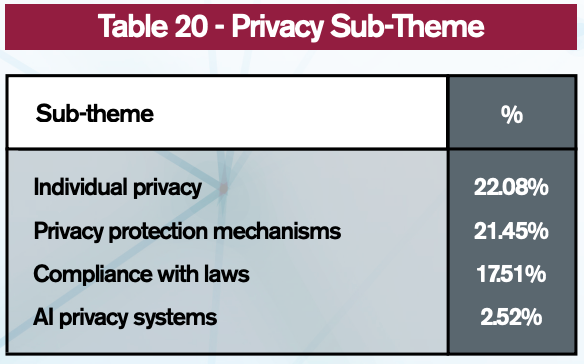

2.7.9 Privacy

Freedom from surveillance or wholesale analysis of an individual’s data exhaust is a timely subject (see Table 20). This is especially the case in an era where AI applications can intrude into the public’s life in ways that no humans were ever capable of doing in the past. About 22% of programs mention the word privacy in a general manner or stress the importance of its protection: “any system, including AI systems, must ensure people’s private data is protected and kept confidential” [161].

In second place, at ~21%, programs mention systems or mechanisms that may protect users’ information: “restricting third party access unless disclosed and necessary to the original purpose or application as stated in the Purpose Specification or in response to a legal order” [162]. To complement these mechanisms, ~18% of programs discuss their compliance with regulations whose purpose is primarily to ensure privacy: “while there is no single approach to privacy, IBM complies with the data privacy laws in all countries and territories in which we operate” [163]. Lastly, a small proportion of programs (~3%) discuss harnessing AI to improve privacy practices: “technologies for cyber security and privacy protection must be advanced” [164].

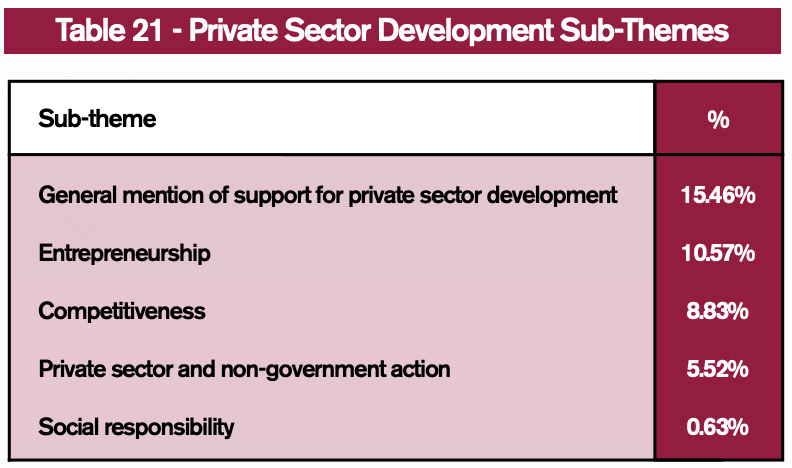

2.7.10 Private sector development

Private firms spearhead the research, development, and commercialization of most AI innovations (see Table 21). This theme compiles the programs attempting to catalyze the development of the private sector, most of which (~79%) have government involvement. In fact, ~15% of programs describe, in general terms, the role of government in supporting the AI industry: “uphold open market competition to prevent monopolization of AI” [165]. Furthermore, we found programs that specifically backed efforts related to promoting the sector’s competitiveness (~9%) and entrepreneurship via small and medium businesses (~11%):

- “Sweden’s greatest opportunities for competitiveness within AI lies within a mutual interaction between innovative AI application in business and innovative organization of society” [166]; and,

- “Assist SMEs to develop AI applications through AI Pilot projects, data platforms, test fields and regulatory co-creation processes” [167].

Non-government parties can also act to improve the conditions and progress of the AI sector. In ~6% of programs, firms created mechanisms such as internal governance structures, performance indicators, or strategies that recognize the potential of AI. Meanwhile, ~1% of the sample discusses attempts by private and non-government entities to align themselves with corporate social responsibility goals (e.g. creating sustainable development goals).

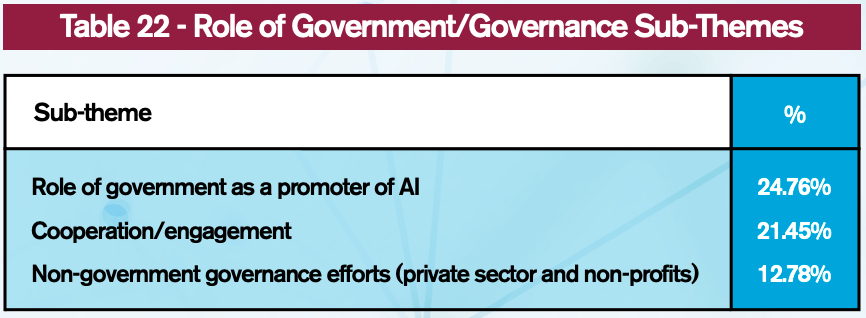

2.7.11 Role of government/governance

This theme contains text on governance efforts related to the general management of AI (see Table 22). Without specifying a particular sector, a quarter of programs reference public entities as a key promoter or arbiter of AI, for example: “avoid excessive legal constraints on artificial intelligence research” [168]. Organizations outside of government, mainly private sector and non-profits, also comment on their role in working with and supervising the technology (~13%): “we need both governance and technical solutions for the responsible development and use of AI” [169].

Any text that highlights public-private partnerships, creation of alliances, or participation in multilateral fora related to AI systems was classified in the cooperation between parties to govern AI sub-theme (~21%): “we encourage states to promote the worldwide application of the eleven guiding principles as affirmed by the GGE and as attached to this declaration and to work on their further elaboration and expansion” [170].

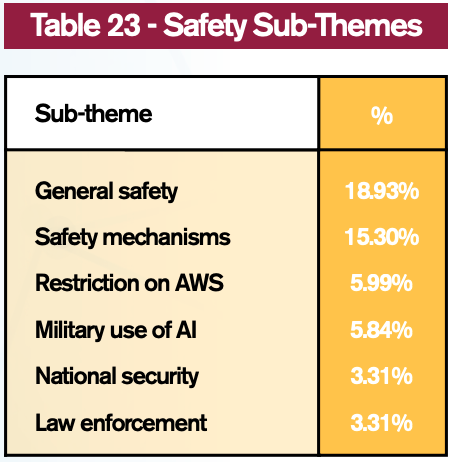

2.7.12 Safety

One of the most important debates regarding AI systems relates to their ability to cause bodily harm and how to minimize it (see Table 23). Whether the harm is caused purposefully, as with an autonomous weapon, or as an unplanned event in the form of an accident, this theme delves into how programs contend with safety issues.

The first part of this theme relates to the overarching safety of AI. In this sense, around 19% of programs include normative statements on the need for the technology to be safe and avoid or minimize physical harm to people: “there is a need for a public discussion about the safety society expects from automated cars” [171]. This is followed by a discussion on the mechanisms that ought to be implemented to ensure the technology’s safety (~15%), including instituting procedures or processes, as well as standards and regulations: “all the stakeholders including industry, government agencies and civil society should deliberate to evolve guidelines for safety features for the applications in various domains” [172].

The second part of the theme focuses on the weaponization of AI. Discussion of the military uses of the technology and the imposition of restrictions on autonomous weapon systems both appear in about 6% of programs:

- “Considering the increasing proliferation of autonomous systems, including among adversaries, the RNLA should continue to experiment with systems that may enhance its portfolio” [173]; and,

- “We deny that AI should be employed for safety and security applications in ways that seek to dehumanize, depersonalize, or harm our fellow human beings” [174].

The third and last part deliberates on AI as an information-gathering technology at the national security level (~3%) and at the local level through law enforcement (~3%):

- “Understanding the need to protect privacy and national security, AI systems should be deployed in the most transparent manner possible” [175]; and,

- “Law enforcement needs for AI and robotics should be identified, structured, categorized and shared to facilitate development of future projects” [176].

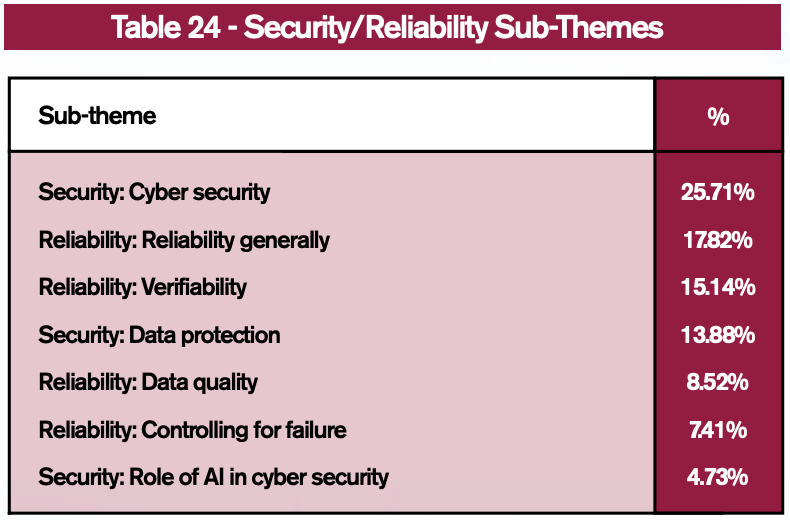

2.7.13 Security/reliability

This theme is divided into two areas: protecting the integrity of AI systems (security) and ensuring their optimal operation (reliability) (see Table 24). On the security side, it encompasses risks to system integrity and the mechanisms to prevent adversarial attacks of the cyber variety (~26%): “manufacturers providing vehicles and other organisations supplying parts for testing will need to ensure that all prototype automated controllers and other vehicle systems have appropriate levels of security built into them to manage any risk of unauthorised access” [177]. Within the context of security, our team added a sub-theme that targets text discussing the protection of data from third parties (~14%): “the development of AIS must preempt the risks of user data misuse and protect the integrity and confidentiality of personal data” [178]. The last part of security entails any text discussing working with AI to thwart cyber-attacks (~5%): “by using different algorithms to parse and analyze data, machine learning empowers AI to become capable of learning and detecting patterns that would help in identifying and preventing malicious acts within the cybersecurity space” [140].

The second section of this theme concerns reliability. About 18% of programs include normative statements on reliability, interoperability, or trustworthiness of AI systems: “utilize emerging frameworks that will help ensure AI technologies are safe and reliable” [179]. In case of a system outage, ~7% of programs highlight the need for procedures to offset the failure of the technology: “organizations should ensure that reliable contingencies are in place for when AI systems fail, or to provide services to those unable to access these systems” [180]. The last two sub-themes label text describing factors that affect data quality (~9%) and mechanisms to confirm the functionality of an AI system (~15%):

- “Users and data providers should pay attention to the quality of data used for learning or other methods of AI systems” [181]; and,

- “Solutions should be rigorously tested for vulnerabilities and must be verified safe and protected from security threats” [182].

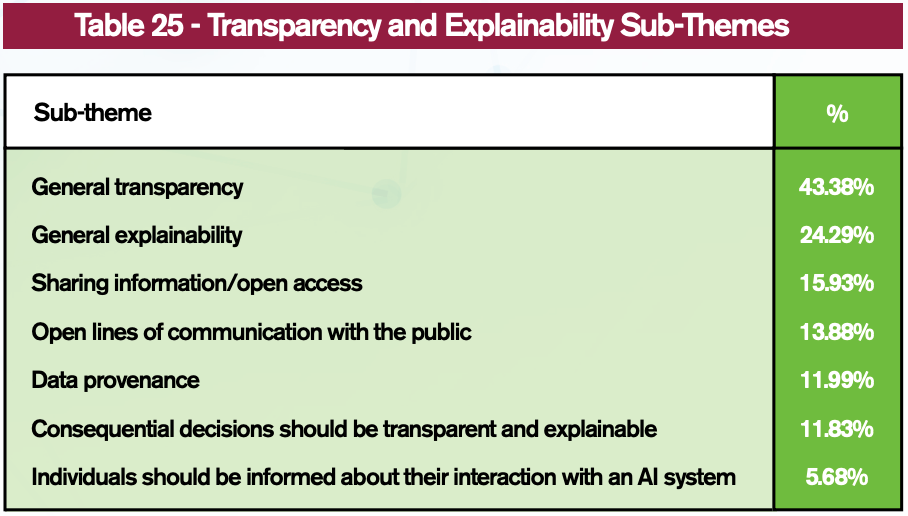

2.7.14 Transparency and explainability

This theme focuses on conveying information on AI systems to stakeholders in a manner that is understandable and clear (see Table 25). Two sub-themes label text on the general use of transparency (~43%) and explainability (~24%) throughout programs, the former being the most popular sub-theme of the database.

A group of sub-themes deals with the information relationship between AI systems and individuals. For instance, ~6% of programs suggest that individuals should be informed about any interaction with AI: “individuals should always be aware when they are interacting with an AI system rather than a human” [183]. Another sub-theme focuses on how individuals are subjected to consequential decisions by this technology, and how they ought to be informed of these decisions and receive an explanation (~12%): “data subjects… have a right to obtain information on the reasoning underlying AI data processing operations applied to them” [184].

To counter information asymmetry, one of the sub-themes highlights efforts to increase public awareness of AI systems or, more generally, to create open lines of communication amongst stakeholders (~14%): “law enforcement should endeavor to completely engage in public dialogue regarding purpose-driven facial recognition use” [185]. A complementary label is applied to efforts looking to share AI-relevant databases amongst institutions (~16%): “develop shared public datasets and environments for AI training and testing” [186]. The last sub-theme in this section covers text indicating where the data used by AI systems originated or how it is used in the training of systems (~12%): “identification of the type of biometric that is captured/stored and its relevance to the purpose for which it is being captured/store” [162].

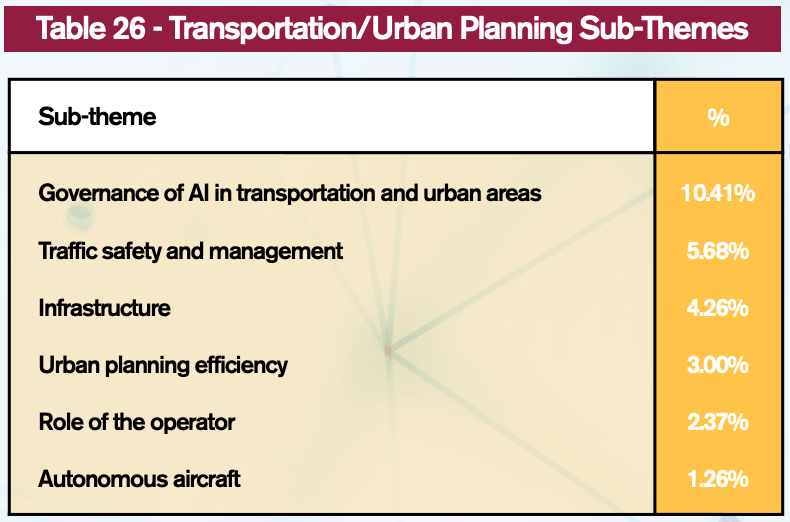

2.7.15 Transportation/urban planning

This theme guides readers through programs interested in AI applications related to transportation (land and air) and their interaction with the urban environment. Starting with the user, programs discuss how an individual controls and communicates with AI transportation systems (2%): “when the vehicle is driven by vehicle systems that do not require the driver to perform the driving task, the driver can engage in activities other than driving” [187]. The theme scales up to one application of this technology, aircraft (~1%): “develop standards and guidelines for the safety, performance, and interoperability of fully autonomous flights” [188].

The next set of sub-themes focuses on the physical and non-physical support systems for AI-based transportation. Some programs discuss the infrastructure requirements needed for these applications to operate (~4%): “AI industry to work with telecommunications providers on specific needs for AI-supportive telecommunications infrastructure” [189]. Others center on the array of rules, guidelines, and regulations meant to govern their utilization (~10%): “this document establishes minimum functionality requirements that the driver can expect of the system, such as the detection of suitable parking spaces” [190]. Meanwhile, there are a number of proposals for managing traffic (~6%): “we can make mobility safer assisting human abilities and greener through platooning heavy goods vehicles to lower emissions and promoting public transport” [191]. Finally, there is an urban planning efficiency sub-theme dealing with sustainability efforts and resource management related to AI, but unrelated to traffic (3%): “AI-enabled solutions in the mobility and transportation sectors could go a long way in making cities more sustainable” [159].